Frequently Asked Question

Storage: QNAP Storage Performance Best Practice

Last Updated 10 years ago

This best practice guide provides general recommendations for configuring QNAP NAS storage systems for best performance.

The most commonly measured performance characteristics are sequential and random operations. Disk performance is measured in IOPS (input/output operations per second); one read request or one write request counts as one I/O operation. Each disk in your storage system can provide a certain number of IOPS based on its rotational speed, average latency, and average seek time.

* SSD IOPS performance depends on the SSD controller chip and the flash cell type.

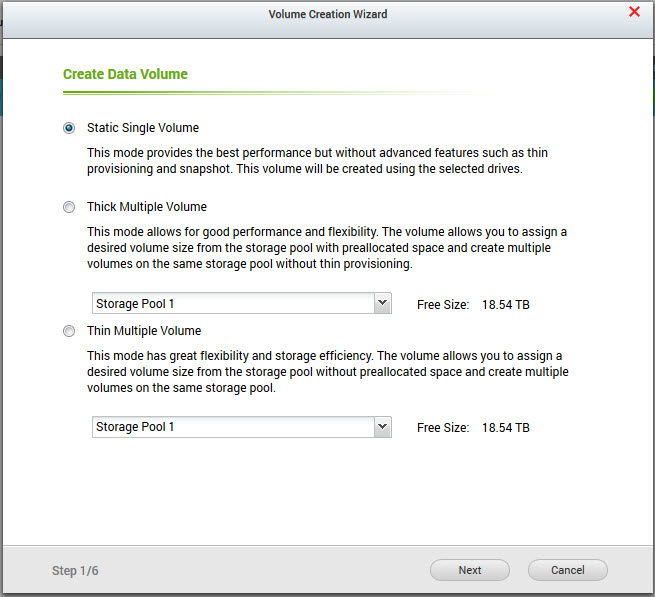

Purpose:

This best practice is intended for QNAP users, partners, and customers who are considering using QNAP NAS systems. It provides recommendations on how to configure QNAP storage for optimal performance depending on your workload.

How to achieve the best possible storage performance:

The guidelines below introduce specific configuration recommendations that enable good performance from a QNAP storage system.

- Choose an appropriate enterprise QNAP NAS. Higher-end enterprise platforms are equipped with faster CPU, memory, and I/O specifications.

- Max out system RAM on the storage.

- Use mSATA and SSD drives for Read/Write caching (available in QTS 4.2.0): use 2 or 4 SSDs and create a RAID 1 or RAID 10 caching pool. See Fig. 1 and Fig. 2.

- Choose the right configuration for RAID and Volume.

- Create a RAID 10 Storage Pool. See Appendix for explanation.

- Use "Static Volume" for best performance or use "Thick Volume" under storage pool.

- P.S. The "Thin Volume" option provides better flexibility, but may decrease storage performance for performance-sensitive applications. See the example in Fig. 4 and the Appendix for an explanation.

Fig. 1 SSD Read/Write Cache

Fig. 2 SSD Caching Pool

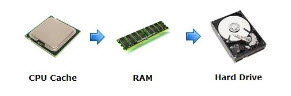

* An example of how the SSD cache pool is accessed within a QNAP NAS.

How to choose your storage media:

Essential guidelines on choosing the right type of media for your QNAP NAS storage. Match the appropriate drive type to the expected workload within your environment.

| Features | Traditional Hard Drives | SAS Hard Drives | SSD Drives |
|---|---|---|---|
| Cost | Low | Medium | High |
| Performance | Low | Medium | High |
| Capacity | High | Medium | Low |

General hard drive IOPS characteristics

| Device | Type | IOPS | Interface |
|---|---|---|---|
| 5400 RPM Drives | HDD | ~75-100 IOPS | SATA III |
| 7200 RPM Drives | HDD | ~125-150 IOPS | SATA III |
| 10,000 RPM Drives | HDD | ~140 IOPS | SAS |
| 15,000 RPM Drives | HDD | ~175-210 IOPS | SAS |
| SSD Drives | SSD | ~40K-100K+ IOPS* | SATA III |

As a basic raw IOPS calculation, four 7,200 RPM hard drives provide roughly 500 raw IOPS in total. To calculate this, multiply the total number of drives by the raw IOPS of each drive (4 HDD x 125 IOPS = 500 IOPS).
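The calculation above can be sketched in a few lines of Python. This is only an illustration: the function name `raw_iops` is hypothetical, and the result is a raw figure that ignores RAID level and caching.

```python
def raw_iops(drive_count: int, iops_per_drive: int) -> int:
    """Total raw IOPS: number of drives multiplied by the raw IOPS of one drive."""
    if drive_count < 0 or iops_per_drive < 0:
        raise ValueError("drive_count and iops_per_drive must be non-negative")
    return drive_count * iops_per_drive


# Four 7,200 RPM drives at about 125 IOPS each, as in the text.
print(raw_iops(4, 125))  # 4 x 125 = 500
```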

Random Access:

Random access means you can read any part of a file in any order. For example, you can read the middle part before the start.

Workload patterns that are mostly random access:

- Multiple clients concurrent access

- Database application

- VM access in a hypervisor environment

- IP-SAN using block-based data

Sequential Access:

Sequential access means you must read the first part of the file before reading the second, then the third, and so on.

Workload patterns that are mostly sequential access:

- Video editing (direct editing with video editing software from a single workstation)

- Video recording (single client, e.g. from an IP camera or video recorder)

- Video streaming (Watching a video from the NAS)

- Large file transfer

- Data backup task

Random vs. Sequential Access:

The seek operation, which occurs when the disk head positions itself at the right disk cylinder to access the requested data, takes more time than any other part of the I/O process.

Because of the way mechanical drives work, reading or writing data sequentially is much faster than doing so randomly. Sequential I/O on mechanical drives can generally be served at a higher throughput because the disk head performs fewer seek operations and, at the same time, larger data segments can be read or written during a single platter rotation.

Random access involves a higher number of seek operations, which means random reads and especially random writes deliver lower throughput and IOPS. During random I/O, disk head positioning, rotational delay, and seek time cause a significant performance penalty.

Fig. 3

Example:

On a mechanical disk-based system, each disk seek takes around 10 ms, and sequentially writing data to that same disk takes about 30 ms per MB. If you sequentially write 100 MB of data to a disk, it takes around 3 seconds. If you instead do 100 random writes of 1 MB each, that takes a total of 4 seconds (3 seconds for the actual writing, plus 10 ms x 100 = 1 second for all the seeking).
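The arithmetic in this example can be reproduced with a small sketch. The constants come from the example itself; the function name `write_time_ms` is hypothetical, and the model deliberately counts only seek time and transfer time, as the example does.

```python
SEEK_MS = 10          # approximate time for one disk seek (from the example)
WRITE_MS_PER_MB = 30  # approximate sequential write time per MB (from the example)


def write_time_ms(total_mb: int, seeks: int) -> int:
    """Time to write total_mb of data when the disk head has to seek `seeks` times."""
    return total_mb * WRITE_MS_PER_MB + seeks * SEEK_MS


sequential_ms = write_time_ms(total_mb=100, seeks=0)  # one continuous 100 MB write
random_ms = write_time_ms(total_mb=100, seeks=100)    # 100 separate 1 MB writes

print(f"sequential: {sequential_ms / 1000:.0f} s, random: {random_ms / 1000:.0f} s")
```

With smaller writes the seek share grows quickly: the same 100 MB written as many small random writes would spend far more time seeking than transferring data.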

Why Flash (SSD) Read/Write caching improves random IOPS

Since flash drives do not have a physical disk head that must move around, they do not incur the 10 ms seek time penalty of a mechanical disk.

The QNAP SSD Read/Write caching feature helps random IOPS performance by re-sorting block addresses in the cache (reducing writes) to reduce the load on the back-end disks.

Cache size matters: the larger the cache, the better (a larger cache creates more opportunities for re-sorting), but in practice cache size is limited by cost, because write caches are far more expensive than back-end disks.

RAID Characteristics

QNAP NAS storage supports different RAID levels, and each RAID level has different capacity and performance characteristics. Before deploying your storage, understand what kind of workload it will be expected to handle.

Choosing which type of RAID to use when building a storage solution usually depends on two things: capacity and performance.

| RAID Type | Minimum # Drives | Fault Tolerance | Capacity | Random Read | Random Write | Sequential Read | Sequential Write |
|---|---|---|---|---|---|---|---|
| RAID 0 | 2 | None | 100% | High | High | High | High |
| RAID 1 | 2 | 1 Disk failure | 50% | High | Low | High | Good |
| RAID 5 | 3 | 1 Disk failure | N - 1 | High | Low | High | Good |
| RAID 6 | 4 | 2 Disk failure | N - 2 | High | Low | High | Good |
| RAID 10 | 4 | 1 Disk failure in each sub RAID | 50% | High | Good | High | Good |

RAID 10:

Works best for heavy transactional workloads with a high share of random writes (greater than 30%).

RAID 5:

Works best for medium-performance, general-purpose, and sequential workloads. RAID 5 is commonly used because it is a more economical choice, with the capacity of only one drive used for parity. For performance-demanding applications, RAID 5 is not the best choice.

RAID 6:

Works best for read-biased workloads such as archiving and backup, but is not the best choice for performance-demanding applications, especially in a random-write-intensive environment.
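To compare the capacity cost of each RAID level, the Capacity and Minimum # Drives columns of the RAID table above can be turned into a small calculator. This is a minimal sketch: the names `MIN_DRIVES` and `usable_capacity_tb` are hypothetical, all drives are assumed to be the same size, and the result is raw capacity before any file system or pool overhead.

```python
# Minimum drive counts, taken from the RAID table.
MIN_DRIVES = {"RAID 0": 2, "RAID 1": 2, "RAID 5": 3, "RAID 6": 4, "RAID 10": 4}


def usable_capacity_tb(raid_level: str, drive_count: int, drive_tb: float) -> float:
    """Raw usable capacity in TB, following the Capacity column of the table."""
    if raid_level not in MIN_DRIVES:
        raise ValueError(f"unsupported RAID level: {raid_level}")
    if drive_count < MIN_DRIVES[raid_level]:
        raise ValueError(f"{raid_level} needs at least {MIN_DRIVES[raid_level]} drives")

    if raid_level == "RAID 0":
        data_drives = drive_count        # 100% of the drives hold data
    elif raid_level in ("RAID 1", "RAID 10"):
        data_drives = drive_count / 2    # 50%: every block is mirrored
    elif raid_level == "RAID 5":
        data_drives = drive_count - 1    # N - 1: one drive's worth of parity
    else:  # RAID 6
        data_drives = drive_count - 2    # N - 2: two drives' worth of parity
    return data_drives * drive_tb


# Example: eight 6 TB drives under each RAID level.
for level in MIN_DRIVES:
    print(level, usable_capacity_tb(level, 8, 6.0), "TB")
```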

Case Study 1: Identify your work load pattern

Separating VMs with different IOPS patterns into multiple Storage Pools with different RAID characteristics will significantly improve performance and reduce I/O bottlenecks.

Assumed equipment used:

TS-EC1680U-RP

16 x 6 TB SATA hard drives

A customer has an IT environment with two clustered VMware ESXi 6.0 host servers and needs to run 20 VMs using the QNAP NAS as the storage back-end. The customer took an inventory of all the VMs and found the following workload pattern in the environment.

1 VM --> High load MSSQL database server (30%+ Random I/O).

3 VM --> High load Application servers (30%+ Random I/O).

1 VM --> Low load vCenter services (Mostly Sequential I/O).

1 VM --> Low load Domain Controller (Mostly Sequential I/O).

1 VM --> Low load Backup server (Mostly Sequential I/O).

2 VM --> Low load DNS services (Mostly Sequential I/O).

2 VM --> Medium load Web Servers (15% Random I/O).

5 VM --> General user virtual desktops.

4 VM --> Internal development servers.

Recommended Configuration:

- Create a RAID 10 Storage Pool 1 with Static volume using 8 or more hard drives.

- Place the 4 high load VMs (30% Random I/O) on the high performance RAID 10.

- Create a RAID 6 Storage Pool 2 with Thick or Static volume using 4 or more hard drives.

- Place the 5 Low load VMs (Mostly Sequential I/O) on this RAID 6.

- Create a RAID 6 Storage Pool 3 with Thick or Static volume using 4 or more hard drives.

- Place the rest of your VMs, Web Servers, user virtual desktops, and internal development servers on this RAID 6.

Alternatively, if SSD drives are available:

- Create a RAID 10 Storage Pool 1 with Static volume using 4 or more SSD drives.

- Place all High load and medium load VMs onto SSD drives.

- Create a RAID 6 Storage Pool 2 with Thick or Static volume using 8 or more hard drives.

- Place the rest of your VMs (Mostly Sequential I/O) on this RAID 6.
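The three-pool hard drive layout from this case study can be expressed as a simple placement rule. The sketch below is illustrative only: the class `VMGroup`, the `load` labels, and the pool names are hypothetical, and the desktops and development servers are marked "unspecified" because the inventory gives no load figure for them.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class VMGroup:
    name: str
    count: int
    load: str  # "high", "medium", "low", or "unspecified"


# VM inventory from the case study.
INVENTORY = [
    VMGroup("MSSQL database server", 1, "high"),
    VMGroup("Application servers", 3, "high"),
    VMGroup("vCenter services", 1, "low"),
    VMGroup("Domain Controller", 1, "low"),
    VMGroup("Backup server", 1, "low"),
    VMGroup("DNS services", 2, "low"),
    VMGroup("Web servers", 2, "medium"),
    VMGroup("General user virtual desktops", 5, "unspecified"),
    VMGroup("Internal development servers", 4, "unspecified"),
]


def pool_for(group: VMGroup) -> str:
    """Map a VM group to a storage pool, following the three-pool layout."""
    if group.load == "high":   # 30%+ random I/O
        return "Storage Pool 1 (RAID 10)"
    if group.load == "low":    # mostly sequential I/O
        return "Storage Pool 2 (RAID 6)"
    return "Storage Pool 3 (RAID 6)"  # everything else


def vms_per_pool(inventory: list[VMGroup]) -> Counter:
    """Count how many VMs land on each pool."""
    totals: Counter = Counter()
    for group in inventory:
        totals[pool_for(group)] += group.count
    return totals


for pool, count in sorted(vms_per_pool(INVENTORY).items()):
    print(f"{pool}: {count} VMs")
```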

Case Study 2: Highest possible IOPS performance

Use high-performance SSD drives to get the best result.

Assumed equipment used:

TS-EC1680U-RP

16 x 1 TB SATA SSD drives

The customer's primary concern is getting the highest possible storage performance; storage capacity is not the main concern.

- Create a RAID 10 Storage Pool 1 with Static volume using all available SSD drives. Since SSD drives do not have any moving mechanical components, very high sequential and random IOPS can be achieved.

Case Study 3: Multi-Client File Access

Multiple simultaneous reads and writes to the storage mean more random IOPS.

A customer has a graphics render farm with about 50 nodes. All 50 nodes simultaneously read the source media library from the storage, render the data, and then write the results back to the storage for further processing. Because all 50 nodes read the media and write the results back concurrently, this creates a major random IOPS bottleneck when using only mechanical drives. Since both high performance and large storage capacity are required for the graphics render process, we can optimize the QNAP NAS storage in the following way.

Recommended Configuration:

Assumed equipment used:

2 x TS-EC1680U-RP

16 x 6 TB SATA hard drives

- Create a RAID 10 Storage Pool 1 with Thick or Static volume using 10 or more drives.

- Write all the results to this Storage Pool to take advantage of the RAID 10 characteristics.

- Create a RAID 5 or 6 Storage Pool 2 with Thick or Static volume using 10 or more drives.

- Read all the data from this Storage Pool.

Alternatively, using two NAS units:

- Populate QNAP NAS 1 with all SSD drives and create a single large Storage Pool 1.

- Write all the results to this NAS. Because the writes are concurrent, they should be considered random IOPS.

- Populate a second QNAP NAS 2 with all mechanical drives and create a large Storage Pool 1.

- Read all data from this NAS.

APPENDIX:

QNAP NAS appliances use advanced Storage Pool technology to offer users both flexibility and performance.

Fig. 4 Storage Pool

Storage Pool using LVM

You can use QNAP flexible volume management to better manage your storage capacity. A storage pool aggregates hard drives into a larger storage space and, with its ability to support multiple RAID groups, can offer more redundancy and reduce the risk of data loss.

Once a Storage Pool has been created, you can choose from three different methods to create your volume on top of the pool. The type of volume you want to create depends on whether you want flexibility or performance.

Static Volume:

A Static Volume takes up all of the available space within the storage pool; it pre-allocates and prepares the space for optimal read/write access. Since a Static Volume takes up all of the Storage Pool space, you cannot create multiple volumes within the same pool.

Thick Volume:

A Thick Volume offers a combination of space flexibility and performance: you can choose how much space to assign to a Thick Volume from the Storage Pool. This means you can create multiple Thick or Thin Volumes within the same Storage Pool. Once the desired Thick Volume size has been chosen, the space is pre-allocated and prepared for read/write access.

Thin Volume:

Thin provisioning allows storage space to be used more flexibly. A Thin Volume does not actually use physical storage space at volume creation; physical space is only used as data is written. This means you can provision a Thin Volume that is larger than your physical storage. You can create multiple Thin Volumes within the same storage pool. Because of this space flexibility, a Thin Volume incurs a performance impact under workload.

mSATA and SSD Read/Write Cache Acceleration:

Solid state drive (SSD) cache technology is based on caching disk I/O reads. When applications on the Turbo NAS access the hard drive(s), the data is stored in the SSD cache. When the same data is accessed by the applications again, it is read from or written to the SSD cache instead of the hard drive(s). Commonly accessed data is therefore kept in the SSD cache, and the hard drive(s) are only accessed when the data cannot be found in the SSD cache.

Traditional data access method

SSD cache data access method
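The read path described above (look in the SSD cache first, fall back to the hard drives on a miss, then keep a copy in the cache) can be illustrated with a toy model. This is not QNAP's implementation: the class `SSDReadCache` is hypothetical, a Python dict stands in for the hard drives, and the least-recently-used eviction policy is an assumption chosen only to make the sketch complete.

```python
from collections import OrderedDict


class SSDReadCache:
    """Toy read cache: serve hits from the cache, fetch misses from the backend."""

    def __init__(self, capacity_blocks: int, backend: dict):
        self.capacity = capacity_blocks
        self.backend = backend        # stands in for the hard drives
        self.cache = OrderedDict()    # stands in for the SSD cache
        self.hits = 0
        self.misses = 0

    def read(self, block: int):
        if block in self.cache:
            # Cache hit: the hard drives are not touched.
            self.hits += 1
            self.cache.move_to_end(block)  # mark as most recently used
            return self.cache[block]
        # Cache miss: read from the hard drives and keep a copy in the cache.
        self.misses += 1
        data = self.backend[block]
        self.cache[block] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used block (assumed policy)
        return data


hard_drives = {0: "block-0", 1: "block-1", 2: "block-2"}
cache = SSDReadCache(capacity_blocks=2, backend=hard_drives)
for block in (0, 0, 1, 0):
    cache.read(block)
print(f"hits={cache.hits} misses={cache.misses}")
```

Repeated reads of block 0 are served from the cache after the first access, which is the effect the SSD cache has on commonly accessed data.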

GTLv151001